Robust A/B Testing Agenda Gives KLRN Insight into Better Email Practices

More than a year ago, San Antonio member station KLRN began experimenting with A/B testing in its monthly renewal emails. “We adopted an attitude of capitalizing, any chance we could, on the opportunity to test campaigns,” says webmaster Patrick Driscoll. “We’ve done a series of these, once a month.”

He says the station’s membership department has always operated based on assumptions from past experience with members. The testing gives the team real data to either confirm or rethink those assumptions. “It’s kind of like walking into a dark room and feeling your way around,” Driscoll says. “We’ll start accumulating knowledge about our audience.”

Here’s how Driscoll designs the testing:

- Pick a testable question related to email content, email delivery or the donation funnel. For instance: Would including a member’s most recent giving amount in the email message get a better response? Would including a button rather than a simple text link increase clicks?

- Change one variable in each email to answer the specific question. Keep all other elements the same.

- Randomly split the audience into two equal groups. While Driscoll uses a Python script to do that, “this can also be done in Excel,” he says.

- Keep tabs on the separate groups’ responses. “Make sure your email and donation funnel are set up to track the two groups separately,” he says.

- Following the campaign, apply a statistical test to determine if results are significantly different between the two groups. Driscoll runs this test using an Excel spreadsheet created by Visual Website Optimizer.
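The article doesn't show Driscoll's script. As a minimal sketch, assuming the audience is available as a Python list of email addresses, a random 50/50 split with a per-recipient group label could look like this. The function name `split_audience`, the `assignment` mapping and the sample addresses are hypothetical.

```python
import random

def split_audience(recipients, seed=None):
    """Randomly split recipients into two groups whose sizes differ by at most one."""
    shuffled = list(recipients)      # copy so the caller's list is left untouched
    rng = random.Random(seed)        # a seeded generator makes the split reproducible
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

# Hypothetical recipients; real addresses would come from the membership database.
members = [f"member{i}@example.org" for i in range(10)]
group_a, group_b = split_audience(members, seed=42)

# Record each recipient's group so clicks and donations can be attributed later.
assignment = {email: "A" for email in group_a}
assignment.update({email: "B" for email in group_b})
print(len(group_a), len(group_b))
```

Seeding the generator lets the same assignments be regenerated when results come in. With an odd number of recipients, group B receives the extra person.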

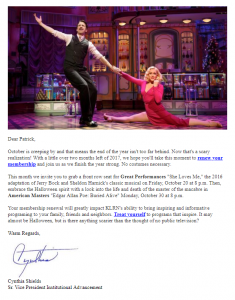

He explains how he applied this process to a recent monthly renewal email. “We were in a hurry to get everything put together” for the email, Driscoll says. While drafting the email, the writer accidentally left out a sentence that mentioned the recipient’s most recent giving amount. That gave Driscoll and team the idea to use the missing data as an A/B test option.

For the “B” group of emails, KLRN left out the recipient’s giving amount. For the “A” group, the station included the amount as usual.

“It turned out to be the most significant test we’ve done,” he says. The emails that included the past giving amount produced twice as many clicks and donations as the other group. The difference was statistically significant at a 98 percent confidence level, indicating that including personalized giving amounts in renewal emails garners a better response.

“It turns out to be a really big deal,” he says. “People were twice as likely to click through to the donation page, and twice as likely to make a donation.”
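The article doesn't say exactly which calculation the Visual Website Optimizer spreadsheet performs. One common way to compare response rates between two groups is a two-proportion z-test, sketched below. The function name and the counts (1,000 recipients per group, 40 versus 20 donations) are hypothetical and chosen only for illustration; they are not KLRN's results.

```python
import math

def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Compare two response rates; return (z, two-sided p-value)."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled rate under the null hypothesis that both groups share one true rate.
    p_pool = (successes_a + successes_b) / (n_a + n_b)
    if p_pool in (0.0, 1.0):
        raise ValueError("need at least one response and one non-response overall")
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical counts: group A saw its giving amount, group B did not.
z, p = two_proportion_z_test(successes_a=40, n_a=1000, successes_b=20, n_b=1000)
lift = (40 / 1000) / (20 / 1000)
print(f"z = {z:.2f}, p = {p:.4f}, lift = {lift:.1f}x")
```

A small p-value means a gap this large would rarely arise by chance if both versions performed equally well. The normal approximation behind the test is only reasonable when each group has a fair number of both responses and non-responses.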

Driscoll has run other tests as well. In one, he found that a button link actually gets fewer clicks than a simple text link. In another, an email designed around a large banner photo was opened more often, but those opens ultimately produced fewer donations.

He says the station has long tried to avoid flashy renewal emails, believing that simpler, text-based emails would be better received by recipients. These tests—like the one in which a button link performed worse than a text link—have confirmed that hunch. “I was so surprised by that one I ran the test two times,” he says. “I can only guess what people are thinking, but sometimes splashy graphics and loud headlines can look pretty spammy. Some emails you get a feeling right away that you don’t want to try to read it. People like email to feel like it’s more personal.”

Every month, Driscoll and team come up with another element to test or segment in these renewal emails. In the process, they’re developing a list of best practices to follow as they communicate with members. Each month’s findings inform how KLRN drafts emails and when those emails are sent.

“We never pass up the chance to do a test,” Driscoll says.