Thinking Beyond Screens #5: PBS is Using AI Right Now. Are You?

If you've read the innovation blogs over the past month, you might be thinking, "This all sounds good, but where do I start?"

In this edition of Thinking Beyond Screens, Mikey Centrella, PBS’s Director of Innovation, looks toward the future of AI in public media and provides an array of resources that stations can take advantage of today.

● ● ● ● ●

PBS is Using AI Right Now. Are You?

For over 15 years, PBS has been leveraging cloud services to create on-demand viewing solutions available to a wide audience. Getting clean, accessible data into the cloud lays the building blocks for AI tools. It allows PBS to experiment more easily, without investing a tremendous amount of money up front.

The PBS Innovation Team was established in 2020 to take PBS’s product experimentation one step further. I have had the wonderful opportunity to be a part of this team and to share our work at public media conferences and summits. I have met many public media professionals who dedicate their time to the craft, and I am continually impressed by the goodness of the people I have met along the way. As a system, we really are a fantastic group.

The innovation team's role at PBS is to leverage cutting-edge technologies to differentiate public media. We build AI, ML, AR, and VR prototypes aimed at honing a technological edge in content delivery, complementing PBS’s primary strength in content differentiation, and we are always looking for new ways to serve our viewers, our stations, and each other. Here are a few videos about AI that the innovation team has been working on:

The innovation team at PBS is driven by a couple of key values. First, we love to experiment. Asking questions and testing hypotheses inspires us to be creative. Second, from prototyping to product deployment, we believe in constant, iterative improvement. For us, success is measured by the degree to which we can help PBS and its member stations use emerging technologies to make our media accessible to more people. And one of the newest tools in our toolbox is AI.

The PBS product teams optimize until we know something works and meets the interests of viewers, delivering on the mission of being a trusted window to the world, from any device in America. And AI is just the latest in a long line of opportunities born from our mission of universal service.

Over the past few months, some of you have reached out to continue these conversations, and I have very much enjoyed it. If you have seen me speak, you have likely heard me share everything I know about technology and AI. If you have not and you are curious, please feel free to invite me; I would welcome that. Knowledge sharing is how we build our collective skills.

Throughout my conversations, I have received questions and requests for clarification about guidelines and best practices, so I wanted to share those here.

So How is PBS Using AI Today?

We have been experimenting with AI models that can be hosted on our own servers, thus allowing us to own the data and be sure that our information stays private.

We view ML, AI, and generative AI as tools that can help us engage and retain viewers, so our digital product teams are venturing further into exciting new innovations in personalization and discovery. Recently, we have been exploring how to integrate AI models’ embeddings into the data lake to help generate content metadata for powering search and recommendation engines. Good results start with high-quality, curated, protected data.
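To make the idea of embeddings a little more concrete: once every title has an embedding vector, "more like this" recommendations can be as simple as ranking the other titles by cosine similarity. This is a minimal illustration, not PBS's pipeline. The three-dimensional vectors and the show names are made-up placeholders (real embeddings come from a model and have hundreds of dimensions), and the function names are hypothetical.

```python
import math

# Made-up, hand-written vectors for illustration only; real embeddings would
# come from whatever model the team hosts. Titles are hypothetical.
EMBEDDINGS: dict[str, list[float]] = {
    "Hypothetical Travel Show": [0.9, 0.1, 0.0],
    "Hypothetical Food Tour": [0.7, 0.3, 0.1],
    "Hypothetical Nature Doc": [0.1, 0.2, 0.9],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def more_like_this(title: str, k: int = 2) -> list[str]:
    """Rank every other title by embedding similarity to `title`."""
    query = EMBEDDINGS[title]
    candidates = [t for t in EMBEDDINGS if t != title]
    candidates.sort(key=lambda t: cosine_similarity(query, EMBEDDINGS[t]), reverse=True)
    return candidates[:k]


print(more_like_this("Hypothetical Travel Show"))
```

With these toy vectors, the food tour ranks well above the nature documentary for the travel show; the same ranking step works unchanged on real, higher-dimensional embeddings.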

We use our established AWS cloud infrastructure to run AI in our “walled garden”. That means we control how our data is used, who has access to it, and where it goes. There are many open-source models, such as Llama and those on the Hugging Face platform, that can be accessed through Amazon Bedrock. We also experiment with free tools and cutting-edge models, purely for research.
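For teams curious what calling a model inside their own AWS account looks like, here is a minimal sketch using boto3 and the Bedrock Runtime Converse API. It assumes boto3 is installed, AWS credentials are configured, and access to the model has been granted in the account. The model ID, region, and helper names are placeholders, not PBS's configuration.

```python
# Assumptions (not from the post): boto3 is installed, AWS credentials are
# configured, and the account has access to this model. MODEL_ID and REGION
# are placeholders; check the Bedrock console for what is enabled for you.
MODEL_ID = "meta.llama3-8b-instruct-v1:0"
REGION = "us-east-1"


def build_converse_request(prompt: str, max_tokens: int = 512, temperature: float = 0.2) -> dict:
    """Build keyword arguments for the Bedrock Runtime Converse API."""
    return {
        "modelId": MODEL_ID,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


def ask_model(prompt: str) -> str:
    """Send one prompt to a model served through Bedrock in your own account."""
    import boto3  # imported lazily so the request builder works without boto3

    client = boto3.client("bedrock-runtime", region_name=REGION)
    response = client.converse(**build_converse_request(prompt))
    # Converse returns the reply as a list of content blocks; take the first.
    return response["output"]["message"]["content"][0]["text"]
```

Calling `ask_model("Suggest three metadata tags for a travel documentary.")` sends a single request. Keeping the request builder separate from the network call makes it easy to log or review exactly what leaves your application.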

The PBS product teams are testing use cases for how we can leverage automation and prediction systems to drive greater engagement, increase conversions, mitigate churn, and sustain and grow station revenues. There are many more use cases, from production to creative and from marketing to fundraising. Here is one example of how AI-generated content could help PBS surface content from our library and share it with users in a more personalized way:

We have a lot of travel shows, but they're currently housed under the "Culture" genre, so they're harder to find within our system. But if AI searches the library, identifies travel content, creates a new tag and classification, then compiles the output into a row on our apps such as "10 travel shows on PBS you have to watch," there would be a lot of value there, both for SEO (discovery) and for current viewers (retention).
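The travel-row example can be sketched as a small pipeline: classify each title, add a tag without changing its original genre, then collect up to ten tagged titles for the row. This is a hypothetical illustration. The keyword check is a stand-in for the AI classifier described above, and the catalog entries and function names are invented.

```python
from collections.abc import Callable, Iterable
from dataclasses import dataclass, field


@dataclass
class Title:
    """A hypothetical catalog entry; real data would come from the metadata store."""
    name: str
    genre: str
    description: str
    tags: set[str] = field(default_factory=set)


# Stand-in vocabulary for the keyword classifier (an assumption, not PBS data).
TRAVEL_TERMS = {"travel", "journey", "destinations", "explore", "tour", "trip"}


def looks_like_travel(title: Title) -> bool:
    """Stand-in classifier: true if the description uses 2+ travel terms.

    A production system would ask an AI model instead of matching keywords.
    """
    words = {w.strip(".,:;!?").lower() for w in title.description.split()}
    return len(words & TRAVEL_TERMS) >= 2


def build_row(
    catalog: Iterable[Title],
    classify: Callable[[Title], bool],
    tag: str = "travel",
    size: int = 10,
) -> list[str]:
    """Tag matching titles (genre is left alone) and return up to `size` names."""
    titles = list(catalog)
    for title in titles:
        if classify(title):
            title.tags.add(tag)
    return [t.name for t in titles if tag in t.tags][:size]


catalog = [
    Title("Show A", "Culture", "A host travels to explore hidden destinations in Europe."),
    Title("Show B", "Culture", "A journey by train: a travel diary across three countries."),
    Title("Show C", "Culture", "Portraits of working artists in their studios."),
]
print(build_row(catalog, looks_like_travel))  # → ['Show A', 'Show B']
```

Because `build_row` takes the classifier as a parameter, a model-backed classifier could replace `looks_like_travel` without touching the tagging or row-building logic.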

Generative AI can also be leveraged to understand viewers’ and donors’ preferences, offering a 360-degree view of all interactions that could trigger more targeted, contextual, and personalized content.

PBS AI Projects in the works include:

- Recommendation engine(s)

- Automation in the broadcast center & cloud

- PBS Kids research

- Writing in the brand voice: Headlines, Fundraising appeals, Emails

- Metadata extraction & creation

- RAG chatbots: Support & Hub Chatbot

- Image alt tag generation

- Quiz generators

- Dynamic video recaps

- Face-swapping technology

- Search enhancements

- C2PA (content provenance and authenticity)

- Video screening

- Prospect automation

- Fundraising modeling

- Cybersecurity triage
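Several of these projects, such as the RAG chatbots, follow the retrieval-augmented generation pattern: fetch the most relevant documents first, then ask the model to answer using only that context. Here is a minimal sketch of the retrieval and prompt-building steps. It uses simple word-overlap scoring as a stand-in for embedding search, the help-center snippets and function names are hypothetical, and the final step of sending the prompt to a model is left out.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(question: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return up to k document IDs ranked by how often they use the question's words."""
    query_terms = set(tokenize(question))
    scored = []
    for doc_id, text in documents.items():
        counts = Counter(tokenize(text))
        score = sum(counts[term] for term in query_terms)
        scored.append((score, doc_id))
    # Highest score first; ties broken alphabetically so results are stable.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def build_prompt(question: str, documents: dict[str, str], k: int = 2) -> str:
    """Ground the model by pasting only the retrieved passages into the prompt."""
    ids = retrieve(question, documents, k)
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in ids)
    return (
        "Answer using only the context below. If the answer is not there, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Hypothetical help-center snippets.
docs = {
    "account-login": "To sign in, use the email address linked to your account.",
    "closed-captions": "Closed captions can be turned on from the video player settings menu.",
    "schedule": "Check your local station website for the broadcast schedule.",
}
print(build_prompt("How do I turn on closed captions?", docs))
```

Documents with no overlapping words are dropped rather than padded into the prompt, which keeps the model from being handed irrelevant context.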

If a member station or public media organization is interested in working with AI for operational workflows in the cloud but is concerned about data privacy, hosting a model on AWS through Amazon Bedrock is the most viable option, and we would be happy to advise and share our best practices.

And here are some of the recommended best practices.

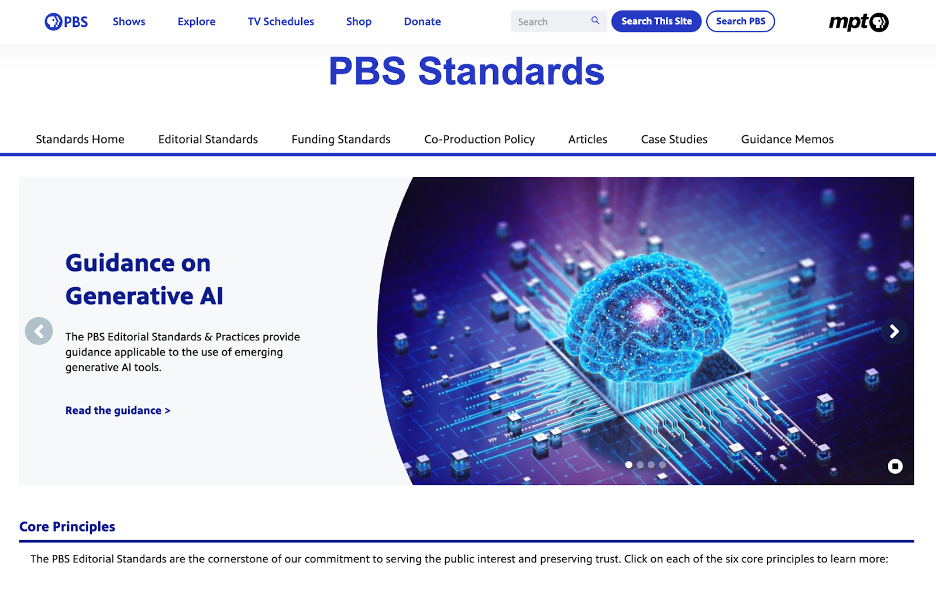

What are the guidelines from PBS?

While generative AI is a huge advancement, it is important to say that GenAI isn’t perfect. It is really great at summarization and pattern recognition.

But even with these incredible advances in LLMs and GPTs, the techniques have serious limitations because they are rooted in older approaches to machine learning development. AI today is nowhere close to what people can do in terms of the breadth and depth of human perception, reasoning, communication, and creativity. And getting AI to be 99.99% accurate is exponentially harder than getting it to 90%.
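A quick calculation shows why the last few percentage points are so hard to win: going from 90% to 99.99% accuracy means cutting the number of wrong answers by a factor of 1,000. The sketch counts expected errors per 10,000 requests; the request volume is an arbitrary illustrative number, and the function name is hypothetical.

```python
def expected_errors(accuracy: float, volume: int) -> int:
    """Expected number of wrong outputs out of `volume` requests at a given accuracy."""
    return round(volume * (1 - accuracy))


requests = 10_000  # arbitrary illustrative volume
errors_at_90 = expected_errors(0.90, requests)
errors_at_9999 = expected_errors(0.9999, requests)
print(errors_at_90, errors_at_9999, errors_at_90 // errors_at_9999)  # → 1000 1 1000
```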

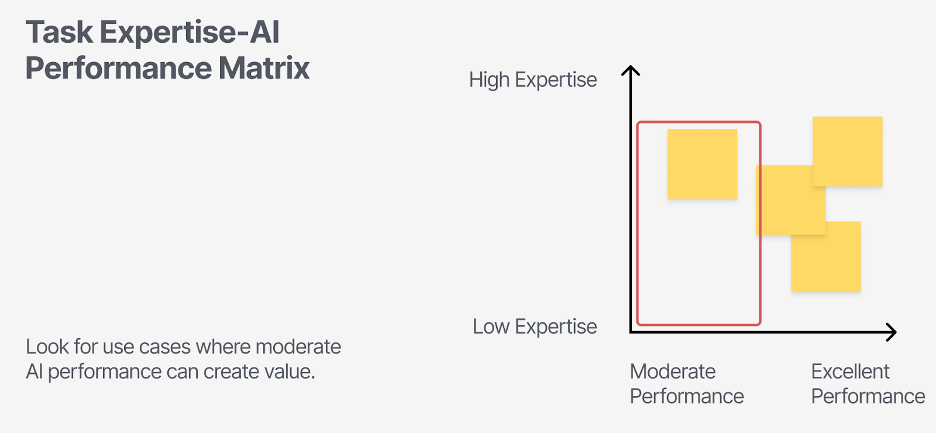

Have you ever had a friend finish your sentences for you? They think they know exactly what you are thinking and about to say, and they are quick to respond, but sometimes they get it totally wrong. That is sort of how GenAI works right now. If you need precision or accuracy, don’t use GenAI. Its sweet spot is tasks that call for moderate expertise and can tolerate low accuracy, as shown in the graph.

Crunching a lot of data does not translate into knowledge. And making complex calculations with human interfaces does not equal autonomy. For machines to be truly autonomous, we need to develop a new scientific approach. But perhaps that is a different blog post. In short, if you are using AI, be aware that it isn’t perfect yet.

Here are some guidelines to follow:

1. If you are going to use the free tools, never upload any proprietary, sensitive, or confidential information, or PII of viewers or donors.

2. If you use generative AI to create new content in production or editorial (or hire an external party, like a production company, who might), the resulting AI-generated content is not copyrightable.

3. Please follow the PBS editorial standards.

Here is a quick summary of how they apply to specific AI tools:

- Text or image generation for inspiration is okay, but do not copy/paste

- Image generation for backgrounds and b-roll is a maybe:

  - Hire experts to review and put a lower third or labels for the audience that explain the use of AI.

- Voice AI or face manipulation is a no, unless it is used to anonymize interviewees:

  - Hire experts to review and put a lower third or labels for the audience that explain the use of AI.

- Voice AI for interview edits or narration is a no

- Generative fill is a no

- Using AI stock libraries is a no

- AI-generated video and music is a no

- Questions about usages? Email standards@pbs.org

What about Marketing?

- Text or image generation for inspiration is okay, but do not copy/paste

- Text for summarization/description/tags/headline ideas is okay, but fact check

- Image generation is a maybe:

  - Hire experts to review and reference the use of AI in the image's alt text

- Questions about usages? Email innovation@pbs.org

So does that mean the AI tools of today are useless? No. It means we have to understand the tools’ limitations and use them for what they are good at. If the accuracy they can provide is limited, what can they help us with? How can we use them to enhance, not replace, our work?

Will AI Take Our Jobs Away?

AI won’t displace jobs. Today’s AI tools cannot replace unskilled or highly skilled labor. A robot cannot clear the dinner table, and an algorithm cannot be a physician. Humans can understand the nuanced context and cultural relevance of a three-dimensional world in ways that AI currently cannot match. This is crucial for creativity and editorial judgment. These tasks require human expertise that AI lacks:

- Making editorial decisions on how to use generated content in new productions.

- Making decisions about sensitive or controversial content.

- Ensuring that content is used appropriately and ethically.

- Balancing business interests with journalistic integrity and public trust.

- Contextualizing content within broader historical or cultural narratives.

- Ensuring diverse perspectives are represented in content.

Humans also harness interpersonal communication and collaboration in the workplace, like maintaining relationships with content creators and producers, negotiating rights and permissions, and coordinating between different departments (legal, production, marketing).

So what about journalism?

The reality is that AI can help, not hurt, newsrooms by transforming and reshaping the way reporters, editors, marketers, product professionals, and managers do their work. AI can positively impact both the editorial and business sides, as well as the supply chain of news gathering, production, distribution, and investigative journalism.

Here is an example. A friend at the Wall Street Journal told me they are using ML and AI for many things, like scanning and summarizing documents, analyzing social media conversations, translating between languages with context, transcribing audio from person-on-the-street interviews, making quizzes, and more. But what really impressed me is how they are using AI to aid investigative journalism: with image recognition, a form of machine learning, they analyzed data from Google Maps to identify electrical wires that may have lead casing.

The journalism industry must keep pace by identifying effective use cases for AI tools, developing best practices for integration, and encouraging collaboration. Newsrooms can use AI today for things like:

- Reporting and news production

  - Searching knowledge bases

  - Summarizing internal docs

  - Fact-checking

  - Transcribing interviews and footage

  - Investigative journalism

- Product and distribution

  - Translating reporting into many languages

  - Generating news quizzes

  - SEO and image alt text generators

  - Personalized content and news updates

  - Comment moderation

  - Chatbots for support and search

But remember that all of this requires human oversight. We need to provide critical thinking, and we need to uphold the principles of journalism in this work: vetting, rigor, reader first, truth and transparency.

What Is Next? What Should We Be Doing With AI?

How can AI and immersive technologies transform storytelling in public media in 2025? We are now two years into generative AI tools. Is anyone using them for more than just answering questions and having a dialogue?

The reality is that most people are not yet empowered, let alone trained, to understand either the limitations or the capabilities of these tools. But if we can embrace that and see machines as collaborative tools, there are a lot of directions we can go. When I think about the future we could create with AI, five things come to mind.

AI-Powered Personalized Narrative Experiences

Could it be possible to provide adaptive storytelling algorithms that adjust content based on viewer engagement and preferences? Obviously there are ethical considerations, and we as public media need to maintain editorial integrity. But personalizing video content will soon become the new normal. Here is an example:

My son sometimes gets really turned off by dramatic music in PBS shows and doesn’t love violent scenes - even if it's an educational documentary about animals. What if there was a way for me to request an uplifting music track matched with optimistic imagery? That future will be coming soon on other platforms. Should public media explore it too?

Immersive Journalism and Investigative AI Reports

AI is really great at pattern recognition and summarization, and immersion tells a more robust story. There are already examples of news organizations using AI for investigative reports: taking satellite imagery or public data and having machine learning crunch the numbers to find patterns, or using AI to analyze complex datasets and create compelling, interactive visualizations. Could we use AR, 360-degree video, and spatial audio in local reporting to literally transport viewers into news events and documentary settings? Or help students see a 3D model of an object with an educational overlay in their living room instead of a planetarium?

AI-Enhanced Interactives & Games

Games take time to produce, but AI can help with the heavy lifting, craft parts of the experience, or even act as the moderator. Gamification elements in educational content, powered by AI and immersive tech, will make substantial differences in how we produce and release interactive experiences.

Live Events and Community Engagement

AI-powered real-time audience interaction during live streams and virtual events, along with augmented reality overlays for in-person community events and educational workshops, will be a game changer for the public media industry. Town halls, events, and screenings can be hybrid and interactive with the help of new technologies.

Accessibility and Inclusion Through Technology

AI can be used to improve closed captioning and audio descriptions and to provide real-time translation. Imagine watching TV and hitting a button to switch the audio to another language, and not just with overdubs: the lips and facial features would adapt too. Maybe even the words in the background!

The potential for AI and immersive tech to amplify public media's mission and impact should be a call to action for public media organizations to embrace and shape these technologies responsibly.

Have great ideas for AI? I want to hear from you!

I would love to know how you are using AI and what tools you are finding useful. Or if you think PBS should be looking into building its own AI platform for something special, tell me! Please reach out and let us know what’s on your mind. We are here to help!

PS: Below is a list of tools we find useful and may be helpful to you.

Resources and Tools

Video

ChatBots

Spreadsheets

- Equals: For startups

- Numerous AI: Plugin to Excel

Slide Decks

Music

Meetings

Legal

- HarveyAI: Best option of the moment

Image Generation

- Stable Diffusion: The most widely used at the moment

- DALL-E 3: Generate images with ChatGPT

- Midjourney: A go-to image generator

- Getty

- Shutterstock

- Canva

For Designers

- Adobe Firefly: Embedded in the platforms that creatives use

- Figma: New features that will design for you

For Developers

- Amazon Bedrock: Best way to deploy models

- Hugging Face: Our favorite platform for models

Video

- Vidyo: Turn long-form video into short-form video

Podcast

- Wondercraft: Turn your script into a podcast

Text Generators

- Byword: SEO

- Writer.com: Copywriter

- Jasper: Marketing

Audio

- Play.ht: Off the shelf voice options in many languages

- ElevenLabs: AI voices, translations and video

- Adobe Podcasts: A good audio clean up tool

- Descript: Video/audio editing tool

PDFs

- ChatwithPDF: Talk to your PDF

Machine Learning and Data Modeling How-To's

- A Complete Guide to Machine Learning Models: Leveraging data to help users discover new content

- Linear Regression in Excel: a 4-step guide

- Association Rules: guide

- Kaggle Intro to Machine Learning

- Datacamp: Learn to code

MarTech

PS. Do you have a great idea? Please share! The PBS innovation team is looking for partners to design, build and launch experiments with emerging technology. Submit your suggestions here: innovation@pbs.org.